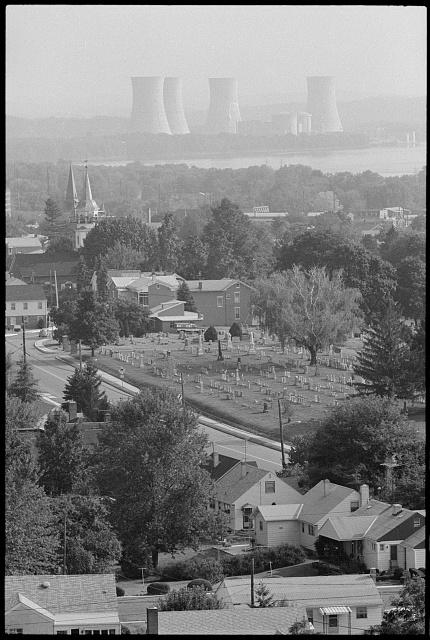

If you are of a certain age, you may remember where you were on March 28, 1979. That’s when the partial meltdown at the Three Mile Island nuclear power plant began. I was in Maryland at the time, representing Brookhaven National Laboratory at a meeting of solar energy researchers sponsored by the recently created U.S. Department of Energy. As we were only about 50 miles from that plant, we were concerned, but the meeting went on.

I was in no position to make or even influence energy policy, but as a fully involved researcher I had a pretty good perch to see much of what was going on. Solar energy was very much in the political spotlight. People were sick and tired of waiting in long lines to gas up their cars, and they were afraid they might literally freeze in the dark come next winter. They needed an answer, and they wanted it now.

The debate on the continuing energy crises had by then crystallized into two factions. On one side were analysts in government and industry who maintained that nuclear energy would be an essential part of the solution. On the other side were most environmentalists, for whom energy from the atom was an abomination.

The two sides didn’t like each other very much, but they did agree that the world was rapidly running out of oil and natural gas. Some economists argued that higher prices would encourage more drilling, which would alleviate the shortage, but they were in the minority. The prevailing view was that there was only so much of these fuels available, and all the drilling in the world wouldn’t greatly change that. What they didn’t envision were technical innovations such as fracking, which unlocked huge amounts of hydrocarbon fuels that had long been considered unrecoverable. Ironically, the one thing they agreed on turned out to be wrong.

They disagreed on just about everything else, including how much energy America was going to need in the coming years. The government planners assumed that to grow the economy we would need to increase our use of energy in direct proportion. The environmentalists maintained that it was possible to improve energy efficiency in our industry, transportation, and buildings so that the economy could grow while using less energy.

On this point the environmentalists were correct. Even after accounting for inflation, we now use less than half as much energy to produce a given amount of goods and services as we did in 1979.

The government analysts pressed their claim that nuclear power was the best way to close the energy gap. True, coal was still plentiful, but they argued that coal production couldn’t or shouldn’t be ramped up sufficiently to close the gap. The hidden agenda was that nuclear waste was the main source of material to make bombs.

Environmentalists pushed back mightily, and they had a plausible strategy. They reasoned that if the nuclear dragon could be killed, America would be forced onto the “soft path” of renewable energy. To make the strategy credible, though, they needed something to replace nuclear power, not in some far future, but right away. That something was energy from the sun.

Unfortunately, existing solar technologies were not ready to take on the task assigned to them. The solar panels we use today, which make electricity using photovoltaic cells, were then far too expensive to be put forward as an immediate alternative. Solar collectors of the other kind, which use the sun’s energy to heat water or air, were sold to Congress as if they were a mature technology ready to be installed on everyone’s roof. That misperception helped ensure that they would never be made affordable enough to achieve meaningful market penetration, even with substantial government subsidies.

Environmental activists didn’t kill nuclear power outright, but they did hobble it. Of the 250 nuclear power reactors that had been planned, only 100 were completed. That this reduction in nuclear capacity would keep the coal industry alive and exacerbate climate change should have been obvious, but climate change was in the far future, and the nuclear issue was before the public immediately. A nuanced discussion of the issue among environmentalists became virtually impossible. Any suggestion that coal was more dangerous than nuclear power was treated as heresy.

The upshot was that fossil fuels have remained the primary source of energy in our economy right up to the present day.

Even though the solar program I was working on failed to achieve its objectives, there were spinoffs. My group at Brookhaven was instrumental in reviving the concept of geothermal heat pumps, which the industry had largely abandoned in the 1950s. America now has more than 1.7 million of these highly efficient installations. On a larger scale, the Department of Energy’s energy efficiency research is partly responsible for the large and welcome decrease in the energy intensity of our economy. The research it sponsored on photovoltaic solar panels and wind turbines helped spur huge cost reductions in the last two decades. Solar and wind energy are now truly competitive in the marketplace.

Renewable energy has a bright future. If problems of electricity distribution and storage can be solved, that future will be even brighter. That’s encouraging, given the urgent challenge that climate change presents. If expanding renewable resources permit us to close existing power plants, then coal, not nuclear, should be tops on the hit list. Nuclear energy is as close to carbon-free as solar or wind power. Coal, on the other hand, emits more carbon dioxide than any other fuel. Coal waste is hugely toxic, and it lasts forever.

As the future unfolds, with climate change now the critical environmental threat, we’re sure to encounter some surprises. We need to make sure that we don’t adopt strategies based on outdated assumptions, wishful thinking, or an unwillingness to question accepted dogma. Such strategies are likely to do far more harm than good.

John Andrews has a Ph.D. in physics. He lives in Sag Harbor.